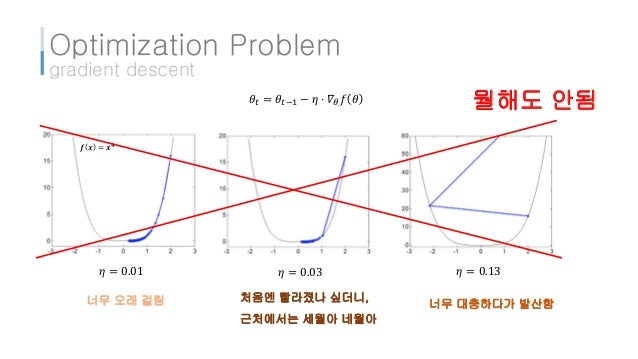

Adam was presented by Diederik Kingma from OpenAI and Jimmy Ba from the University of Toronto in their 2015 ICLR paper (poster) titled "Adam: A Method for Stochastic Optimization".

The name Adam is derived from adaptive moment estimation.

Adam: A Method for Stochastic Optimization. We introduce Adam, an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments.

The method is straightforward to implement, is computationally efficient, has little memory requirements, is invariant to diagonal rescaling of the gradients, and is well suited for problems that are large in terms of data and/or parameters.

We propose Adam, a method for efficient stochastic optimization that only requires first-order gradients with little memory requirement.

The method computes individual adaptive learning rates for different parameters from estimates of first and second moments of the gradients.
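Written out, the per-parameter update can be sketched as follows. The symbols are the notation of the Adam paper's Algorithm 1 and are not defined in the text above: $g_t$ is the stochastic gradient at step $t$, $\alpha$ the step size, $\beta_1, \beta_2 \in [0, 1)$ the decay rates of the two moment estimates, and $\epsilon$ a small constant for numerical stability. All operations are element-wise, with $m_0 = v_0 = 0$.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

Because $\hat{m}_t$ and $\hat{v}_t$ are kept separately for each coordinate of $\theta$, each parameter effectively gets its own step size $\alpha / (\sqrt{\hat{v}_t} + \epsilon)$.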

Our method is designed to combine the advantages of two.

The paper was published as a conference paper at the 3rd International Conference for Learning Representations, San Diego. Adam is a stochastic gradient descent algorithm based on estimation of the 1st- and 2nd-order moments of the gradient. The algorithm estimates the 1st-order moment (the gradient mean) and the 2nd-order moment (the element-wise squared gradient) using exponential moving averages, and corrects the bias of both estimates.
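The description above (exponential moving averages of the gradient and the squared gradient, followed by bias correction) can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names `adam_step` and `minimize_quadratic` and the toy objective are assumptions made for this example, and the default hyperparameters are the ones suggested in the Adam paper.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of parameters.

    theta, grad, m, v are lists of floats of equal length;
    t is the 1-based step counter used for bias correction.
    Returns the updated (theta, m, v) as new lists.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        # Exponential moving averages of the gradient and the squared gradient.
        m_i = beta1 * m_i + (1.0 - beta1) * g
        v_i = beta2 * v_i + (1.0 - beta2) * g * g
        # Bias correction: both averages start at zero and are biased toward it.
        m_hat = m_i / (1.0 - beta1 ** t)
        v_hat = v_i / (1.0 - beta2 ** t)
        # Per-parameter step: each coordinate is scaled by its own sqrt(v_hat).
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


def minimize_quadratic(steps=3000, lr=0.01):
    """Toy usage (hypothetical objective): minimize f(x, y) = x^2 + 100 * y^2."""
    theta = [5.0, -3.0]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    for t in range(1, steps + 1):
        grad = [2.0 * theta[0], 200.0 * theta[1]]  # exact gradient of f
        theta, m, v = adam_step(theta, grad, m, v, t, lr=lr)
    return theta
```

Two properties are easy to check with this sketch. On the first step the bias-corrected ratio is approximately the sign of the gradient, so every parameter moves by roughly `lr`. And multiplying all gradients by a positive constant leaves the updates almost unchanged (up to the effect of `eps`), which is a simple case of the invariance to gradient rescaling.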

Adam: A Method for Stochastic Optimization. Presented by Dor Ringel. Outline: supervised ML theory and the importance of optimum finding; gradient descent and its variants; limitations of SGD; earlier approaches/building blocks.

I will quote liberally from the Adam paper in this post unless stated otherwise. Adam is a stochastic first-order optimization algorithm that uses historical information about stochastic gradients and incorporates it in an attempt to estimate the second-order moment of the stochastic gradients.