

Adaptive Cache Compression for High-Performance Processors

Alaa R. Alameldeen and David A. Wood, Computer Sciences Department, University of Wisconsin-Madison ({alaa, david}@cs.wisc.edu)

Abstract

Modern processors use two or more levels of cache memories to bridge the rising disparity between processor and memory speeds.

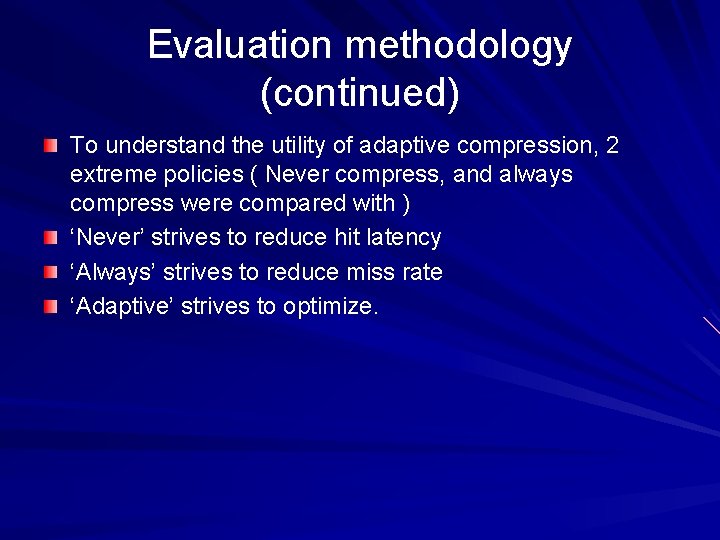

Compression can improve cache performance by increasing effective cache capacity and eliminating misses. However, decompressing cache lines also increases cache access latency, potentially degrading performance. In this paper, we develop an adaptive policy that dynamically adapts to the costs and benefits of cache compression.
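To make the capacity argument concrete, the sketch below counts how many lines fit in one cache set once they are compressed. It assumes a hypothetical set layout (64-byte lines stored in 8-byte segments, with 32 segments and 8 tags per set, so at most 4 uncompressed lines); these parameters are illustrative assumptions, not values given in this text.

```python
import math

# Hypothetical set layout, chosen only for illustration:
# data is stored in 8-byte segments, and the set has twice as many tags
# as it has room for uncompressed 64-byte lines.
LINE_BYTES = 64
SEGMENT_BYTES = 8
SEGMENTS_PER_SET = 32   # data space for 4 uncompressed lines
TAGS_PER_SET = 8        # at most 8 resident lines per set


def segments_needed(compressed_bytes: int) -> int:
    """Segments for one line with a positive compressed size, capped at a full line."""
    size = min(compressed_bytes, LINE_BYTES)
    return math.ceil(size / SEGMENT_BYTES)


def lines_that_fit(compressed_sizes: list[int]) -> int:
    """Count lines, taken in the given order, that fit in one set's segments and tags."""
    used_segments = 0
    resident = 0
    for size in compressed_sizes:
        need = segments_needed(size)
        if resident == TAGS_PER_SET or used_segments + need > SEGMENTS_PER_SET:
            break
        used_segments += need
        resident += 1
    return resident


def effective_capacity_ratio(compressed_sizes: list[int]) -> float:
    """Resident lines relative to the number of uncompressed lines a set can hold."""
    uncompressed_lines = SEGMENTS_PER_SET * SEGMENT_BYTES // LINE_BYTES
    return lines_that_fit(compressed_sizes) / uncompressed_lines
```

With these assumed parameters, uncompressed lines give four resident lines per set; lines that compress to 24 bytes are limited by the eight tags rather than by data space (a ratio of 2.0); and lines that compress to 40 bytes fit six per set.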


This paper proposes an adaptive scheme for cache compression that combines LRU (least-recently-used) replacement information with prediction. Cache compression increases the effective cache size, allowing more data to be cached at a given level and thereby increasing the chance that a request hits in the cache.
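One way LRU information and a predictor can be combined is sketched below, as a simplified illustration rather than the paper's exact design. A single saturating counter is charged the decompression latency whenever a compressed line is hit at an LRU stack depth that would also have hit without compression, and is credited the miss penalty whenever compression turns a would-be miss into a hit, or a miss occurs that storing more lines compressed would have avoided. New lines are stored compressed only while the counter is positive. The latencies, the saturation bound, and the class and method names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical latencies in cycles, chosen only for illustration.
DECOMPRESSION_PENALTY = 5
MISS_PENALTY = 400


@dataclass
class CompressionPredictor:
    """Global saturating counter that decides whether new lines are stored compressed."""

    limit: int = 1 << 19   # hypothetical saturation bound
    counter: int = 0

    def _add(self, delta: int) -> None:
        # Clamp to [-limit, limit] so the counter can react when behavior changes.
        self.counter = max(-self.limit, min(self.limit, self.counter + delta))

    def record_access(self, hit: bool, stack_depth: int, was_compressed: bool,
                      uncompressed_ways: int, compressed_ways: int) -> None:
        """Update the counter from one access, classified by LRU stack depth.

        stack_depth is 0 for the most recently used line. For a miss it is the
        depth of a matching tag that is still tracked without data, or any
        value >= compressed_ways when no such tag exists.
        """
        if hit:
            if stack_depth >= uncompressed_ways:
                # Only the extra compressed capacity made this a hit.
                self._add(MISS_PENALTY)
            elif was_compressed:
                # The line would have hit anyway; compression only added latency.
                self._add(-DECOMPRESSION_PENALTY)
        elif stack_depth < compressed_ways:
            # A miss that storing more lines compressed could have avoided.
            self._add(MISS_PENALTY)

    def should_compress(self) -> bool:
        """Store new lines compressed only while compression has paid off."""
        return self.counter > 0
```

Under these assumed numbers, a workload whose hits mostly land near the MRU position drives the counter negative and turns compression off, which matches the risk for low-miss-rate workloads; a workload that keeps hitting beyond the uncompressed associativity drives it positive.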


Alaa R. Alameldeen and David A. Wood. Adaptive Cache Compression for High-Performance Processors. In Proceedings of the 31st Annual International Symposium on Computer Architecture, 2004, pages 212-223 (publication record dated March 2, 2004).

We evaluate adaptive cache compression using full-system simulation and a range of benchmarks. We show that compression can improve performance for memory-intensive commercial workloads by up to 17%.

However, always using compression hurts performance for low-miss-rate benchmarks due to unnecessary decompression overhead, degrading performance by up to 18%.

[...] are shown to be manageable and do not affect compressed caching performance as severely as previous studies reported.

Furthermore, adaptive compressed caching is revisited at a time when the gap that originally motivated the idea, between CPU processing power and disk access times, has never been so wide.

Adaptive Cache Management for Energy-efficient GPU Computing. Xuhao Chen, Li-Wen Chang, Christopher I. Rodrigues, Jie Lv, Zhiying Wang, and Wen-Mei Hwu. State Key Laboratory of High Performance Computing, National University of Defense Technology, Changsha, China.

ENHANCEMENT OF CACHE PERFORMANCE IN MULTI-CORE PROCESSORS. A thesis submitted by MUTHUKUMAR S under the guidance of Dr. JAWAHAR in partial fulfillment for the award of the degree of DOCTOR OF PHILOSOPHY in ELECTRONICS AND COMMUNICATION ENGINEERING, B.S. ABDUR RAHMAN UNIVERSITY, B.S. ABDUR RAHMAN INSTITUTE OF SCIENCE.

1. Cache compression requires hardware that can decompress a word in only a few CPU clock cycles. This rules out software implementations and strongly influences compression algorithm design.
2. Cache compression algorithms must be lossless to maintain correct microprocessor operation.
3. The block size for cache compression.

Adaptive Data Compression for High-Performance Low-Power On-Chip Networks. Yuho Jin, Ki Hwan Yum, and Eun Jung Kim. Department of Computer Science, Texas A&M University, College Station, TX 77843, USA; University of Texas at San Antonio, San Antonio, TX 78249, USA.
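The first two cache-compression requirements listed above (lossless operation and fast per-word decompression) can be illustrated with a simplified, frequent-pattern-style word encoding. It is an example only, not the scheme of any work cited in this section, and its prefix assignments and function names are hypothetical. Each 32-bit word gets a 2-bit prefix (zero word, sign-extended 8-bit value, sign-extended 16-bit value, or uncompressed), so every word can be decoded independently, which is what makes fast, parallel hardware decoding plausible; the round trip is exact, so nothing is lost.

```python
# Hypothetical prefixes for a simplified frequent-pattern-style encoding.
ZERO, SIGN8, SIGN16, RAW = 0, 1, 2, 3
PAYLOAD_BITS = {ZERO: 0, SIGN8: 8, SIGN16: 16, RAW: 32}
PREFIX_BITS = 2


def to_signed32(word: int) -> int:
    """Interpret an unsigned 32-bit value as two's complement."""
    return word - (1 << 32) if word & 0x8000_0000 else word


def encode_word(word: int) -> tuple[int, int]:
    """Return (prefix, payload) for one unsigned 32-bit word."""
    value = to_signed32(word)
    if value == 0:
        return ZERO, 0
    if -128 <= value < 128:
        return SIGN8, value & 0xFF
    if -32768 <= value < 32768:
        return SIGN16, value & 0xFFFF
    return RAW, word


def decode_word(prefix: int, payload: int) -> int:
    """Rebuild the exact 32-bit word, sign-extending narrow payloads."""
    bits = PAYLOAD_BITS[prefix]
    if bits == 0:
        return 0
    if bits == 32:
        return payload
    sign_bit = 1 << (bits - 1)
    value = (payload ^ sign_bit) - sign_bit   # sign-extend from `bits` bits
    return value & 0xFFFF_FFFF


def compress_line(words: list[int]) -> list[tuple[int, int]]:
    """Encode each word independently; no word depends on its neighbors."""
    return [encode_word(w) for w in words]


def decompress_line(encoded: list[tuple[int, int]]) -> list[int]:
    return [decode_word(prefix, payload) for prefix, payload in encoded]


def compressed_bits(encoded: list[tuple[int, int]]) -> int:
    """Total size in bits, counting each word's prefix and payload."""
    return sum(PREFIX_BITS + PAYLOAD_BITS[prefix] for prefix, _ in encoded)
```

For example, a line holding the words 0, 5, 0xFFFFFFFF, 0x1234, 0xDEADBEEF, 0xFFFFFED4, 0x7FFF, and 0x8000 encodes to 144 bits instead of 256, and decompressing it returns the original words exactly.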

Researchers have reported that cache compression can improve the performance of uniprocessors by up to 17% for memory-intensive commercial workloads [1] and up to 225% for memory-intensive scientific workloads [2]. Researchers have also found that cache compression and prefetching techniques can improve CMP throughput by 10-51% [3].

[1] Adaptive Cache Compression for High-Performance Processors.
[2] A Significance-Based Compression Scheme for L2 Caches.
[3] Interactions Between Compression and Prefetching in Chip Multiprocessors.