
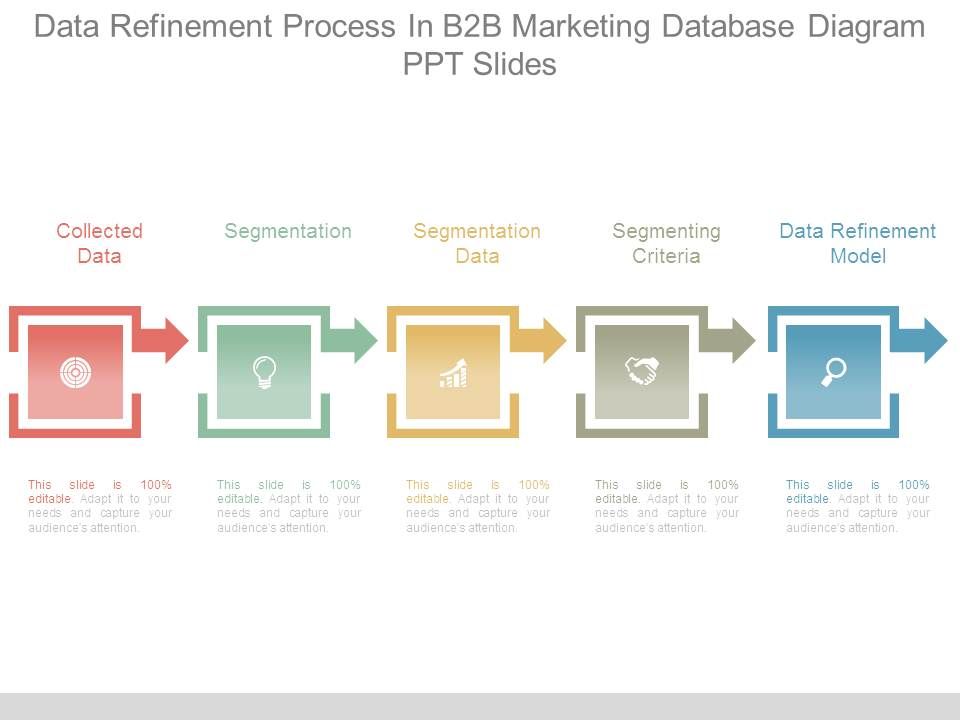

What is data refinement? Serious data scientists need to make data refinement their first priority and break down the data work into three steps. Data refining is a process that refines disparate data within a common context to increase awareness and understanding of the data, remove data variability and redundancy, and develop an integrated data resource.
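As a rough illustration of that definition, here is a minimal Python sketch that maps records from two disparate sources into one common context, removes variability in how a value is written, and drops redundant copies to produce a single integrated list. The source names (`crm`, `billing`), the field names, and the `CANONICAL_COUNTRY` mapping are hypothetical; real data refining would involve many more rules.

```python
# Hypothetical mapping that removes variability in how sources spell a country.
CANONICAL_COUNTRY = {
    "us": "US", "u.s.": "US", "usa": "US", "united states": "US",
    "de": "DE", "germany": "DE",
}


def to_common_context(record: dict, source: str) -> dict:
    """Map one source-specific record onto a shared schema (name, country)."""
    if source == "crm":
        name, country = record["customer_name"], record["country"]
    elif source == "billing":
        name, country = record["name"], record["nation"]
    else:
        raise ValueError(f"unknown source: {source}")
    key = country.strip().lower()
    return {
        "name": " ".join(name.split()).title(),  # collapse whitespace, fix case
        "country": CANONICAL_COUNTRY.get(key, country.strip().upper()),
    }


def refine(sources: dict[str, list[dict]]) -> list[dict]:
    """Integrate all sources into one list, keeping one copy of each entity."""
    seen = set()
    integrated = []
    for source, records in sources.items():
        for record in records:
            row = to_common_context(record, source)
            key = (row["name"], row["country"])
            if key not in seen:  # redundant copy of a known entity: skip it
                seen.add(key)
                integrated.append(row)
    return integrated


if __name__ == "__main__":
    raw = {
        "crm": [{"customer_name": "ada  lovelace", "country": "U.S."}],
        "billing": [
            {"name": "Ada Lovelace", "nation": "united states"},
            {"name": "karl weierstrass", "nation": "Germany"},
        ],
    }
    print(refine(raw))
    # [{'name': 'Ada Lovelace', 'country': 'US'}, {'name': 'Karl Weierstrass', 'country': 'DE'}]
```

The two spellings of the same customer collapse into one row only because both were first normalized into the shared schema; deduplicating the raw records directly would have kept both.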

Refinement is a generic term in computer science that covers various approaches for producing correct computer programs and for simplifying existing programs. Data refinement, in this sense, converts an abstract data model (for example, one expressed in terms of sets) into data structures that a program can actually implement (for example, arrays).

First and foremost, being data-driven means grounding our planning and execution in data, so that we make informed decisions based on data and research. In this series, we examine how to become a more data-driven communications professional. In the previous post, we proved this hypothesis false: "Trustworthy content is shared more on social media."

Use IBM's Data Refinery tool to cleanse and shape tabular data with a graphical flow editor. You can also use interactive templates to code operations, functions, and logical operators.
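Data Refinery itself is driven through its flow editor, so the following is not its API. It is a plain-Python sketch, using a hypothetical sales table and column names, of the kind of shaping steps such a flow typically chains together: a filter that combines conditions with a logical operator, a derived column, and a grouped aggregate.

```python
from collections import defaultdict

# Hypothetical input table: one dict per row.
SALES = [
    {"region": "north", "units": 12, "price": 2.5},
    {"region": "south", "units": 0, "price": 3.0},
    {"region": "north", "units": 5, "price": 4.0},
    {"region": "south", "units": 8, "price": 1.5},
]


def shape(rows: list[dict]) -> dict[str, float]:
    # Filter: keep rows that sold something AND have a positive price.
    kept = [r for r in rows if r["units"] > 0 and r["price"] > 0]
    # Derive: add a revenue column without modifying the input rows.
    with_revenue = [{**r, "revenue": r["units"] * r["price"]} for r in kept]
    # Group and aggregate: total revenue per region.
    totals = defaultdict(float)
    for r in with_revenue:
        totals[r["region"]] += r["revenue"]
    return dict(totals)


if __name__ == "__main__":
    print(shape(SALES))  # {'north': 50.0, 'south': 12.0}
```

Each step produces a new table rather than editing the previous one, which mirrors how a flow of operations can be inspected or reordered one step at a time.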

When you cleanse data, you fix or remove data that is incorrect, incomplete, improperly formatted, or duplicated. A new data architecture identifies a business's needs, collects relevant and accurate data, and makes it accessible to the right people in the right context.
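A minimal sketch of such a cleansing pass in Python, assuming hypothetical `email`, `signup` (ISO date), and `age` fields and a made-up plausibility range for age: values that can be repaired (stray whitespace, letter case) are fixed, and rows that are incomplete, incorrect, improperly formatted, or duplicated are removed.

```python
from datetime import date


def cleanse(records: list[dict]) -> list[dict]:
    cleaned, seen_emails = [], set()
    for rec in records:
        # Fix formatting: trim whitespace and normalize case.
        email = str(rec.get("email") or "").strip().lower()
        if "@" not in email:
            continue  # incomplete or incorrect: no usable email
        try:
            signup = date.fromisoformat(str(rec.get("signup") or "").strip())
            age = int(rec.get("age"))
        except (TypeError, ValueError):
            continue  # improperly formatted date or age that cannot be repaired
        if not 0 <= age <= 130:
            continue  # incorrect: implausible age (hypothetical rule)
        if email in seen_emails:
            continue  # duplicate of a record already kept
        seen_emails.add(email)
        cleaned.append({"email": email, "signup": signup.isoformat(), "age": age})
    return cleaned


if __name__ == "__main__":
    raw = [
        {"email": " Ada@Example.com ", "signup": "2023-01-05", "age": "36"},
        {"email": "ada@example.com", "signup": "2023-02-01", "age": 36},  # duplicate
        {"email": "", "signup": "2023-01-01", "age": 20},                 # incomplete
        {"email": "bob@example.com", "signup": "05/01/2023", "age": 40},  # bad format
        {"email": "eve@example.com", "signup": "2022-12-31", "age": -3},  # incorrect
    ]
    print(cleanse(raw))
```

Normalizing before checking for duplicates matters here: the first two rows only match once the email has been trimmed and lowercased.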

Schema refinement is just a fancy term for polishing tables: it uses functional dependencies to detect and remove redundancy in a relational schema. It is the last step before physical design and tuning with typical workloads.
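To make the role of functional dependencies (FDs) concrete, the Python sketch below uses a hypothetical employee table. It checks whether an FD such as `rating -> wage` holds in a table instance, computes the attribute closure used to find keys, and flags FDs whose left side is not a superkey, which is the Boyce-Codd normal form (BCNF) test. Note that a table instance can only refute an FD; the FD itself is a constraint on every legal instance.

```python
def fd_holds(rows: list[dict], lhs: list[str], rhs: list[str]) -> bool:
    """True if every pair of rows that agrees on `lhs` also agrees on `rhs`."""
    seen: dict[tuple, tuple] = {}
    for row in rows:
        x = tuple(row[a] for a in lhs)
        y = tuple(row[a] for a in rhs)
        if seen.setdefault(x, y) != y:
            return False
    return True


def closure(attrs: set, fds: list) -> set:
    """Attribute closure X+: every attribute functionally determined by X."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def bcnf_violations(schema: set, fds: list) -> list:
    """Non-trivial FDs X -> Y whose left side X is not a superkey of `schema`."""
    return [
        (lhs, rhs)
        for lhs, rhs in fds
        if not rhs <= lhs and not schema <= closure(set(lhs), fds)
    ]


# Hypothetical schema and FDs, written as (lhs, rhs) pairs of frozensets.
EMPS = {"ssn", "name", "lot", "rating", "wage", "hours"}
FDS = [
    (frozenset({"ssn"}), frozenset(EMPS)),         # ssn is a key
    (frozenset({"rating"}), frozenset({"wage"})),  # rating determines wage
]

if __name__ == "__main__":
    rows = [  # only the columns relevant to the FD are shown
        {"ssn": 1, "rating": 8, "wage": 10},
        {"ssn": 2, "rating": 8, "wage": 10},
        {"ssn": 3, "rating": 5, "wage": 7},
    ]
    print(fd_holds(rows, ["rating"], ["wage"]))  # True
    print(sorted(closure({"rating"}, FDS)))      # ['rating', 'wage']
    print(bcnf_violations(EMPS, FDS))            # [(frozenset({'rating'}), frozenset({'wage'}))]
```

Here `rating -> wage` is flagged because `rating` is not a key, so the same wage is stored again for every employee with that rating. The usual remedy is to decompose the table, for example into one table without `wage` and a separate `(rating, wage)` table.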

Database design proceeds in steps, beginning with:

1. Requirements analysis.
2. Conceptual design: a high-level description, often using ER diagrams.

Topic discussion: Data for Refinement and Deposition/Publication (Xinhua Ji, NCI). In crystallographic structure refinement, high-resolution data, even when incomplete, always helps improve the electron density, revealing additional structural features. Therefore, it is beneficial to include more data in the refinement.

Backlog refinement is an important but poorly understood part of Scrum. It deals with understanding and refining scope so that backlog items are ready to be pulled into a sprint, where the development team turns them into a product increment. Good backlog refinement involves regular team discussions plus informal, ad hoc ones.

In one book-length treatment of data refinement, the authors begin with an explanation of the fundamental notions, showing that data refinement proofs reduce to proving simulation. The book's second part contains a detailed survey of important methods in this field, which are carefully analysed and shown either to be incomplete, with counterexamples to their application, or to be always applicable whenever data refinement holds. Data preparation is the process of preparing and providing data for data discovery, data mining, and advanced analytics.
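Returning to the point that data refinement proofs reduce to proving simulation: the Python sketch below refines an abstract model (a set of integers) into a concrete one (a sorted, duplicate-free list), defines an abstraction function from concrete to abstract states, and checks the simulation condition that each concrete operation followed by abstraction gives the same result as abstraction followed by the corresponding abstract operation. All names are hypothetical, and the check enumerates a small bounded state space, so it tests the condition rather than proving it.

```python
from bisect import bisect_left
from itertools import combinations


# Abstract model: states are frozensets of ints.
def abs_add(s: frozenset, x: int) -> frozenset:
    return s | {x}


def abs_member(s: frozenset, x: int) -> bool:
    return x in s


# Concrete model: states are strictly increasing lists (sorted, no duplicates).
def conc_add(xs: list, x: int) -> list:
    i = bisect_left(xs, x)
    if i < len(xs) and xs[i] == x:
        return list(xs)           # already present: state unchanged
    return xs[:i] + [x] + xs[i:]  # insert at the sorted position


def conc_member(xs: list, x: int) -> bool:
    i = bisect_left(xs, x)        # binary search relies on the invariant
    return i < len(xs) and xs[i] == x


def invariant(xs: list) -> bool:
    return all(a < b for a, b in zip(xs, xs[1:]))


def abstraction(xs: list) -> frozenset:
    """Map a concrete state to the abstract state it represents."""
    return frozenset(xs)


def check_simulation(domain, add=conc_add, member=conc_member) -> bool:
    """Bounded check that the concrete operations simulate the abstract ones."""
    values = sorted(domain)
    # Initial states must correspond: the empty list represents the empty set.
    if abstraction([]) != frozenset():
        return False
    # Every concrete state over `values` that satisfies the invariant.
    states = [list(c) for k in range(len(values) + 1) for c in combinations(values, k)]
    for xs in states:
        a = abstraction(xs)
        for x in values:
            ys = add(xs, x)
            # Commuting condition: abstract(concrete step) == abstract step(abstract).
            if not invariant(ys) or abstraction(ys) != abs_add(a, x):
                return False
            # Observations (query results) must agree as well.
            if member(xs, x) != abs_member(a, x):
                return False
    return True


if __name__ == "__main__":
    print(check_simulation(range(4)))  # True
```

A correct implementation passes, while an `add` that simply appends, as in `check_simulation(range(4), add=lambda xs, x: xs + [x])`, fails because it breaks the sortedness invariant. A real refinement proof would establish the same condition for all states rather than a bounded sample.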

The goal of data preparation is to support business analysts and data scientists by preparing different kinds of data for their analytical purposes. Data fusion is the process of integrating data from multiple sources in order to build more sophisticated models and understand more about a project. It often means gathering data on a single subject from several sources and combining it for central analysis.
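A minimal fusion sketch in Python, assuming each (hypothetical) source is a dictionary keyed by a shared subject ID: sources are merged in priority order into one central record per subject, with earlier sources winning conflicts and later sources filling in missing (`None`) values. Real fusion pipelines also need entity matching and conflict rules tailored to the data.

```python
def fuse(*sources: dict) -> dict:
    """Merge per-subject records from several sources into one record each."""
    fused: dict = {}
    for source in sources:  # sources are given in priority order
        for subject_id, fields in source.items():
            merged = fused.setdefault(subject_id, {})
            for field, value in fields.items():
                # Keep the first non-missing value seen for each field.
                if value is not None and merged.get(field) is None:
                    merged[field] = value
    return fused


if __name__ == "__main__":
    sensor = {"pump-7": {"temp_c": 71.5, "rpm": None}}
    maintenance = {
        "pump-7": {"rpm": 1450, "temp_c": 65.0, "last_service": "2024-03-02"},
        "pump-9": {"last_service": "2023-11-20"},
    }
    print(fuse(sensor, maintenance))
```

In the usage example, `pump-7` keeps the sensor's temperature (higher priority) but takes its `rpm` and service date from the maintenance source, giving one combined record per subject for central analysis.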

Data munging is the initial process of refining raw data into content or formats better-suited for consumption by downstream systems and users.
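A small munging sketch in Python, assuming a hypothetical raw log format of `<timestamp> <level> <message>` with inconsistent spacing and casing: the raw text is parsed into uniform records and emitted as JSON, a format a downstream system can consume directly.

```python
import json

# Hypothetical raw input: inconsistent spacing and casing, a blank line, junk.
RAW = """\
2024-05-01T10:00:00Z INFO  service started
2024-05-01T10:00:03Z warn   disk at 91%

2024-05-01T10:00:07Z ERROR upstream timeout
garbage
"""


def munge(raw: str) -> str:
    """Parse raw log text into a JSON array of uniform records."""
    records = []
    for line in raw.splitlines():
        parts = line.split(maxsplit=2)  # split on runs of whitespace
        if len(parts) < 3:
            continue                    # skip blank or malformed lines
        timestamp, level, message = parts
        records.append({"ts": timestamp, "level": level.upper(), "msg": message.strip()})
    return json.dumps(records)


if __name__ == "__main__":
    print(munge(RAW))
```

Skipping malformed lines is one design choice; a stricter pipeline might instead route them to a separate error output for review.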