The atomic world is a two-way street. Computed entropies correlated closely with measured values.

Entropy arises when we describe a system by means of unobservable microstates, and the entropy of a macrostate is a measure of how many microstates are conflated in that same macrostate.
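The microstate-counting idea above can be sketched with a toy system. The example below is only an illustration under stated assumptions: the "system" is a row of coins, a microstate is one exact head/tail sequence, and a macrostate is the total number of heads. The function names `multiplicity` and `boltzmann_entropy` are hypothetical, and the formula S = k_B ln W (Boltzmann's relation) is brought in for the illustration rather than taken from the text above.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def multiplicity(n_coins: int, n_heads: int) -> int:
    """Count the microstates (exact head/tail sequences) that share
    the macrostate 'n_heads heads out of n_coins coins'."""
    return math.comb(n_coins, n_heads)


def boltzmann_entropy(n_microstates: int) -> float:
    """Boltzmann entropy S = k_B * ln(W) in J/K for W microstates."""
    return K_B * math.log(n_microstates)


if __name__ == "__main__":
    n = 10
    for heads in range(n + 1):
        w = multiplicity(n, heads)
        # Macrostates near heads = n/2 conflate the most microstates,
        # so they carry the largest entropy.
        print(f"heads={heads:2d}  microstates={w:4d}  S={boltzmann_entropy(w):.3e} J/K")
```

A macrostate with a single microstate (all heads or all tails) has zero entropy in this picture, while the half-heads macrostate has the most.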

What is the origin of entropy? Entropy (n.), 1868, from German Entropie, "measure of the disorder of a system", coined in 1865 on analogy of Energie by the German physicist Rudolf Clausius (1822-1888) in his work on the laws of thermodynamics, from Greek entropia, "a turning toward", from en, "in", plus trope, "a turning, a transformation" (from the PIE root trep-, "to turn"). The notion is supposed to be "transformation contents".

Entropy: A Key Concept for All Data Science Beginners. Introduction. Entropy is one of the key aspects of machine learning. It is a must-know for anyone who wants to make a ...
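In machine learning, "entropy" usually refers to Shannon's information entropy rather than the thermodynamic quantity. The sketch below is a minimal illustration, assuming a list of class labels and entropy measured in bits; the function name `shannon_entropy` is hypothetical and not taken from any particular library.

```python
import math
from collections import Counter


def shannon_entropy(labels) -> float:
    """Shannon entropy H = -sum(p * log2(p)) of a collection of labels, in bits."""
    labels = list(labels)
    total = len(labels)
    counts = Counter(labels)
    # Only labels that actually occur are counted, so p is never zero here.
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy(["spam", "ham", "spam", "ham"]))   # evenly mixed labels
    print(shannon_entropy(["spam", "spam", "spam", "spam"]))  # a pure set of labels
```

An evenly split two-class set gives the maximum of one bit, and a pure set gives zero, which is why decision-tree learners often use this quantity to score candidate splits.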

The Origin of Entropy. The term entropy was first coined by the German physicist and mathematician Rudolf Clausius and ... Nobody knows what entropy is, because so far nobody has presented us with a true definition of entropy.

Currently, entropy can be anything that you want it to be. There is no standardized definition of entropy. There are literally dozens of different, contradictory, self-defeating, and mutually exclusive definitions of entropy.

Density functional theory computational methods were used to calculate the entropies of various molecules. Computed entropies correlated closely with measured values. For organic systems, an average of 8.4 kcal/mol for the reaction entropy (one particle to two) at 298.15 K was observed.

This value is largely determined by translational entropy gain. Based on the etymology (German Entropie; see en- plus Greek trope, "transformation"), we understand that the word entropy originated in Greek and came into English via German.
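To give a feel for the size of the translational entropy mentioned above, the sketch below evaluates the Sackur-Tetrode expression for an ideal monatomic gas. This is an assumption made for illustration only: it is a textbook ideal-gas formula, not the density functional theory procedure used in the study, and the function name `translational_entropy` and the argon example are hypothetical choices.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J s
N_A = 6.02214076e23       # Avogadro constant, 1/mol
R = K_B * N_A             # molar gas constant, J/(mol K)
AMU = 1.66053906660e-27   # atomic mass unit, kg


def translational_entropy(mass_u: float,
                          temperature: float = 298.15,
                          pressure: float = 1.0e5) -> float:
    """Molar translational entropy of an ideal gas in J/(mol K),
    from the Sackur-Tetrode equation.

    mass_u: particle mass in atomic mass units
    temperature: kelvin
    pressure: pascal
    """
    mass = mass_u * AMU
    # (2 pi m k T / h^2)^(3/2) is the inverse cube of the thermal wavelength.
    inv_lambda_cubed = (2.0 * math.pi * mass * K_B * temperature / H**2) ** 1.5
    # Volume available per particle for an ideal gas: V/N = kT/p.
    volume_per_particle = K_B * temperature / pressure
    return R * (math.log(inv_lambda_cubed * volume_per_particle) + 2.5)


if __name__ == "__main__":
    s = translational_entropy(39.948)  # argon, assumed example
    # Convert T*S to kcal/mol (1 kcal = 4184 J) for comparison with the text.
    print(f"S_trans = {s:.1f} J/(mol K), T*S = {s * 298.15 / 4184:.1f} kcal/mol")
```

Because every free particle carries a translational term of this kind, a reaction that turns one particle into two gains roughly one such contribution, partly offset by lost internal degrees of freedom.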

With its Greek prefix en-, meaning "within", and the trop- root here meaning "change", entropy basically means "change within (a closed system)".

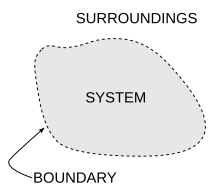

The closed system we usually think of when speaking of entropy, especially if we're not physicists, is the entire universe. But entropy applies to closed systems of any size. Entropy is seen when the ice in a glass of water in a warm room melts, that is, as the ...

Research concerning the relationship between the thermodynamic quantity entropy and the evolution of life began around the turn of the 20th century. In 1910, American historian Henry Adams printed and distributed to university libraries and history professors the small volume A Letter to American Teachers of History, proposing a theory of history based on the second law of thermodynamics and on the principle of entropy. ... any body in the form of heat, but a struggle for entropy, which becomes available through the transition of energy from the hot sun to the cold earth.

In The Mystery of Life's Origin, Thaxton and Bradley explain how living organisms use biochemistry to produce a metastable environment that is not thermodynamically favorable (since the system's low constraint-entropy is not combined with a high temperature-entropy) by converting externally available energy into internally useful functions in a way that causes the overall entropy change in the universe ... It is the basis for the operation of everything from the engine that launches a rocket into orbit to the biological machinery that runs every cell in your body. And what it means is that we know the whole system, the whole universe, must have started this energy-transforming process when its battery was full.

Entropy is a measure of the randomness or disorder of a system. The value of entropy depends on the mass of a system. It is denoted by the letter S and has units of joules per kelvin.
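As a rough illustration of the units and the mass dependence, the sketch below estimates the entropy change when ice melts in a warm room, using the relation ΔS = Q/T for heat exchanged at constant temperature. The latent heat of fusion (about 334 J/g), the room temperature of 295.15 K, the treatment of the room as a constant-temperature reservoir, and the function name `entropy_change_melting` are all assumptions made for this example.

```python
LATENT_HEAT_FUSION = 334.0  # J/g, approximate value for water ice (assumed)
T_MELT = 273.15             # K, melting point of ice at atmospheric pressure


def entropy_change_melting(mass_g: float, t_room: float = 295.15):
    """Return (dS_ice, dS_room, dS_total) in J/K for melting mass_g grams of ice.

    The ice absorbs heat Q at T_MELT; the room, idealized as a reservoir
    at the constant temperature t_room, gives up the same heat Q.
    """
    q = mass_g * LATENT_HEAT_FUSION  # heat absorbed by the ice, in joules
    ds_ice = q / T_MELT              # the ice gains entropy
    ds_room = -q / t_room            # the room loses entropy
    return ds_ice, ds_room, ds_ice + ds_room


if __name__ == "__main__":
    for mass in (50.0, 100.0):
        ds_ice, ds_room, ds_total = entropy_change_melting(mass)
        print(f"{mass:5.0f} g: ice {ds_ice:+.1f} J/K, room {ds_room:+.1f} J/K, "
              f"total {ds_total:+.1f} J/K")
```

Doubling the mass doubles every entry, and the room's entropy falls while the total still rises, because the same heat is divided by a lower temperature on the ice side.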

The entropy change of a system can be positive or negative. According to the second law of thermodynamics, the entropy of a system can only decrease if the entropy of another system increases. Using the following etymology, what is the origin of entropy?

We currently don't know, but the significance of these features is potentially giving us a massive clue as to how to find a theory of quantum gravity.

The connection has led some to conjecture, and right now I'm riding on thi ... The Origin of Irreversibility. So there's a deep mystery lurking behind our seemingly simple ice-melting puzzle.

At the level of microscopic particles, nature doesn't have a preference for doing things in one direction versus doing them in reverse. The atomic world is a two-way street. And yet, for some reason, when we get to large collections of atoms, a one-way street emerges for the ...

All spontaneous change in a system is accompanied by an increase in the entropy of the system. This first formal statement of the Second Law, in basically acknowledging Carnot's original declaration, states that spontaneous change, occurring in one direction only, is measurable by an increase in Clausius's newly formulated theoretical quantity, entropy.