Parallel Processing with Big Data

Many big-data applications require a steady stream of new data to be input, stored, processed, and output, which can strain memory and I/O bandwidth, both of which tend to be more limited than the computation rate. We need parallel processing for big data analytics because our data is divided into splits and stored on HDFS (Hadoop Distributed File System). So when we want, for example, to run some analysis on our data, we need to process all of those splits, and that is why parallel processing is necessary for this operation. MapReduce is one of the most widely used solutions for parallel processing.
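To make "we need all of the splits" concrete, here is a minimal Python sketch. It assumes the data is a plain list of numbers cut into fixed-size splits (standing in for HDFS blocks) and uses the standard library's `concurrent.futures.ProcessPoolExecutor` rather than Hadoop; the function names are illustrative, not part of any Hadoop API. Each worker returns a partial `(sum, count)` for its own split, and only by combining every partial result do we get the correct overall mean.

```python
from concurrent.futures import ProcessPoolExecutor


def make_splits(data, split_size):
    """Cut the data into fixed-size splits (a stand-in for HDFS blocks)."""
    return [data[i:i + split_size] for i in range(0, len(data), split_size)]


def partial_stats(split):
    """Runs on one worker process: summarise a single split as (sum, count)."""
    return sum(split), len(split)


def parallel_mean(data, split_size=1000, workers=4):
    """Process every split in parallel, then combine the partial results."""
    splits = make_splits(data, split_size)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_stats, splits))
    # The answer needs every split: add up all partial sums and counts.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


if __name__ == "__main__":
    values = list(range(1, 10_001))
    print(parallel_mean(values, split_size=2_500))  # 5000.5
```

Returning a sum and a count, rather than a per-split mean, matters: simply averaging per-split means gives the wrong answer whenever the splits have different sizes.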

Parallel processing is commonly known as the simultaneous use of more than one CPU to execute a program.

Why do we need parallel processing for big data analytics? Big data analytics needs parallel processing because the huge amounts of data are too big to handle on one processor. By running on numerous …

Parallel processing is a computing technique, used by professionals and data scientists, in which multiple processors (CPUs) each handle a separate part of an overall project. Professionals use techniques like these to process big data sets faster and more efficiently.

Parallel processing makes quick work of a big data set because, rather than having one processor do all the work, you split the task among many processors. This is the largest benefit of parallel processing. Another advantage is fault tolerance: when one processor or node goes down, another node can pick up the task from where it was last saved.
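The two benefits described above, splitting the work among processors and carrying on after a failure, can be sketched with the standard library's `concurrent.futures.ProcessPoolExecutor`. This is a minimal illustration under stated assumptions: the work is a list of number chunks, node failure is simulated by a hypothetical "flaky" task that raises an error on its first attempt, and a failed task is simply re-run from scratch instead of being resumed from a saved checkpoint. All function names are illustrative.

```python
from concurrent.futures import ProcessPoolExecutor


def square_sum(task):
    """Work for one chunk. A task flagged as flaky fails on its first attempt,
    simulating a node going down (a hypothetical failure model)."""
    chunk, flaky, attempt = task
    if flaky and attempt == 0:
        raise RuntimeError("simulated node failure")
    return sum(x * x for x in chunk)


def run_with_retries(chunks, flaky_ids=(), max_attempts=3, workers=4):
    """Spread chunks over worker processes and resubmit any chunk that fails."""
    results = {}
    attempts = {i: 0 for i in range(len(chunks))}
    pending = list(range(len(chunks)))
    with ProcessPoolExecutor(max_workers=workers) as pool:
        while pending:
            futures = {
                i: pool.submit(square_sum, (chunks[i], i in flaky_ids, attempts[i]))
                for i in pending
            }
            pending = []
            for i, fut in futures.items():
                try:
                    results[i] = fut.result()
                except RuntimeError:
                    attempts[i] += 1
                    if attempts[i] >= max_attempts:
                        raise  # give up after too many failures
                    # Resubmit; the pool runs it on whichever worker process is free next.
                    pending.append(i)
    return sum(results[i] for i in range(len(chunks)))


if __name__ == "__main__":
    data = [[1, 2], [3], [4, 5]]
    print(run_with_retries(data, flaky_ids={1}))  # 55
```

Real frameworks such as Hadoop handle this bookkeeping for you; the sketch only shows the idea that a failed unit of work can be handed to another worker without restarting the whole job.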

ITECH1103 Big Data Analytics, Week 7 (Federation University): 1. What is parallel processing? Why do we need it for big data analytics?

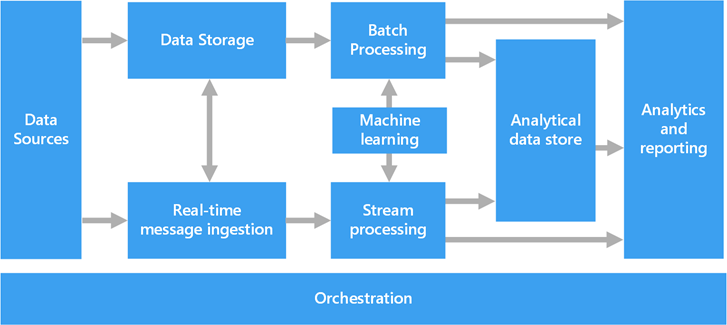

Parallel processing models and architectures vary greatly. The volume, variety, and velocity of big data, together with the valuable information it contains, have motivated the investigation of many new parallel data processing systems for big data, in addition to approaches that use traditional database management systems (DBMSs).

What does parallel data analysis mean? Parallel data analysis is a method for analyzing data using parallel processes that run simultaneously on multiple computers. It is used to analyze large data sets, such as large telephone call records, network logs, and web repositories of text documents, which can be too large to fit in a single relational database.
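As an example of parallel data analysis on network logs, the sketch below counts HTTP status codes chunk by chunk in separate processes and then merges the per-chunk counts. It assumes a hypothetical log format in which the status code is the last whitespace-separated field, and it uses the standard library's `multiprocessing.Pool` on a single machine instead of a cluster; the function names are illustrative.

```python
from collections import Counter
from multiprocessing import Pool


def count_statuses(lines):
    """Analyse one chunk of log lines: count HTTP status codes.
    Assumes a hypothetical format where the status is the last field."""
    counts = Counter()
    for line in lines:
        fields = line.split()
        if fields:  # skip blank lines
            counts[fields[-1]] += 1
    return counts


def chunked(lines, size):
    """Yield consecutive chunks of at most `size` lines."""
    for start in range(0, len(lines), size):
        yield lines[start:start + size]


def parallel_status_counts(lines, chunk_size=10_000, workers=4):
    total = Counter()
    with Pool(processes=workers) as pool:
        # Order does not matter for counting, so take chunk results as they finish.
        for partial in pool.imap_unordered(count_statuses, chunked(lines, chunk_size)):
            total.update(partial)  # merge this chunk's counts into the total
    return total


if __name__ == "__main__":
    logs = ["10.0.0.1 GET /a 200", "10.0.0.2 GET /b 404", "10.0.0.3 GET /a 200"]
    print(parallel_status_counts(logs, chunk_size=2, workers=2))
```

Because counting is associative, the partial results can be merged in any order, which is why `imap_unordered` is a reasonable choice here.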

Big data helps companies generate valuable insights. Companies use big data to refine their marketing campaigns and techniques, and they use it in machine learning projects to train models, as well as in predictive modeling and other advanced analytics applications.

We can't equate big data with any specific data volume. Big data deployments can involve terabytes, petabytes, and even exabytes of data.

The role of the data backbone: the technology or technologies we choose for data collection and preparation mark the first pillar of our system, the data backbone. The data backbone is the entry point into our system. Its sole responsibility is to relay data to the other links in our data analytics platform.
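The relay role of the data backbone can be sketched in a few lines of Python. This is a hypothetical illustration, not any particular product: `DataBackbone`, `register`, `ingest`, and `drain` are invented names, and in-memory `queue.Queue` objects stand in for whatever transport a real platform would use. The point it shows is that the backbone does no analysis itself; it only hands each incoming record to every downstream link.

```python
import queue


class DataBackbone:
    """Hypothetical entry point: accepts incoming records and relays each one
    to every registered downstream component (storage, processing, ...)."""

    def __init__(self):
        self._subscribers = {}

    def register(self, name):
        """Attach a downstream link and return the queue it will read from."""
        q = queue.Queue()
        self._subscribers[name] = q
        return q

    def ingest(self, record):
        # No analysis happens here; the record is only relayed onward.
        for q in self._subscribers.values():
            q.put(record)


def drain(q):
    """Collect everything currently waiting in a queue."""
    items = []
    while True:
        try:
            items.append(q.get_nowait())
        except queue.Empty:
            return items


if __name__ == "__main__":
    backbone = DataBackbone()
    storage = backbone.register("storage")
    processing = backbone.register("processing")
    for record in [{"id": 1}, {"id": 2}]:
        backbone.ingest(record)
    print(drain(storage), drain(processing))
```

Keeping the backbone this narrow means new downstream links can be added by registering another queue, without changing how data enters the system.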

Parallel and distributed computing is of paramount importance, especially for mitigating scale and timeliness challenges. This special issue contains eight papers presenting recent advances in parallel and distributed computing for big data applications, focusing on … Hadoop is a distributed parallel processing framework that facilitates distributed computing.

To dig deeper into Hadoop, we first need an understanding of distributed computing, since that is the foundation Hadoop is built on. In simple English, distributed computing is often described as parallel processing spread across many machines.
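Hadoop's MapReduce model processes data in a map phase, a shuffle (group-by-key) phase, and a reduce phase. The word-count sketch below walks through those three phases in plain Python. It is an assumption-laden teaching sketch, not Hadoop's API: everything runs in one process, whereas on a cluster the mappers and reducers would run in parallel on different nodes and the framework would perform the shuffle over the network. The function names are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Group all intermediate values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: combine all the counts emitted for one word."""
    return key, sum(values)


def word_count(lines):
    # Each line could be mapped independently, which is what makes this parallelisable.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    docs = ["big data needs parallel processing", "parallel processing of big data"]
    print(word_count(docs))
    # {'big': 2, 'data': 2, 'needs': 1, 'parallel': 2, 'processing': 2, 'of': 1}
```

The mapper and reducer only ever see one line or one key at a time, so a framework is free to spread them across as many machines as it has.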

Let's take an example: say we have the task of painting a room in our house, and we …

Parallel processing can make a program run faster because more engines (CPUs) are running it. In computing, it means running two or more processors (CPUs) to handle separate parts of an overall task. Breaking up different parts of a task among multiple …

Big data analytics is necessary because traditional data warehouses and relational databases can't handle the flood of unstructured data that defines today's world. They are best suited to structured data, and they also can't keep up with the demands of real-time data.

Big data analytics fills the growing demand for understanding unstructured data in real time. This is particularly important for companies that rely on fast …

Apache Spark is a unified computing engine and a set of libraries for parallel data processing on computer clusters. As of the time of this writing, Spark is the most actively developed open source engine for this task, making it the de facto tool for any developer or data scientist interested in big data. Spark supports multiple widely used programming languages (Python, Java, Scala, and R).